Project Overview

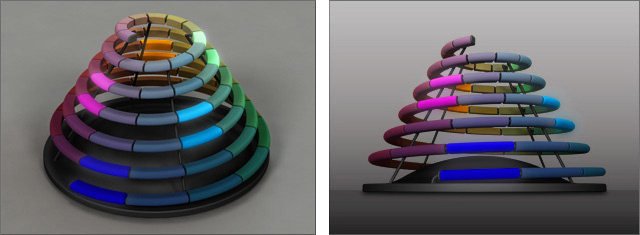

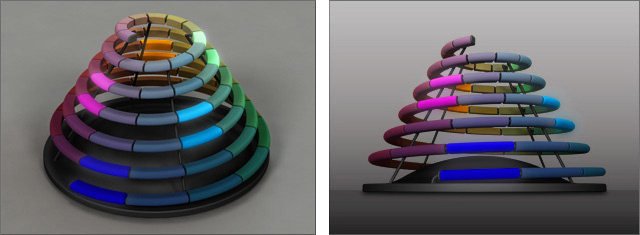

Building on the works of Wassily Kandinsky and Oskar Fischinger, this project explores visualizing sound as individual colored notes arranged in an organized spiral. The project began with the discovery of the spiral form and was developed into a screen-based interactive program and a physically controlled tabletop device.

Part 1 – Discovery of the Spiral Form

The search for an optimal way to visualize the relationships between musical notes led to a spiral arrangement. Not only did the spiral represent the tonal changes between adjacent notes, but it also revealed the relationship between the same note in different octaves.
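The geometry of such a spiral can be sketched in a few lines. This is only an illustration, not the project's code: it assumes twelve semitone steps per turn, MIDI-style note numbers, and a radius that grows by a fixed amount per octave. The function names and parameters are hypothetical.

```python
import math

def spiral_angle_degrees(note_number):
    """Angle of a note on the spiral, assuming 12 semitones per full turn."""
    return (note_number % 12) * 30

def spiral_position(note_number, base_radius=1.0, growth_per_octave=1.0):
    """Return (x, y) for a note; the radius grows steadily with pitch (assumed)."""
    angle = math.radians(spiral_angle_degrees(note_number))
    radius = base_radius + growth_per_octave * note_number / 12
    return radius * math.cos(angle), radius * math.sin(angle)

# Adjacent notes sit one 30-degree step apart, while the same note in
# the next octave lands at the same angle, one ring farther out.
middle_c = spiral_position(60)
next_c = spiral_position(72)
```

Under these assumptions, every C falls along one spoke of the spiral, which is the octave relationship described above.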

Part 2 – Sound Spiral Animation

A hypothesis was made that harmonic music would create visual geometric patterns such as triangles and squares within the sound spiral. To explore this, Beethoven’s Moonlight Sonata was digitally encoded and accompanied by an animation in which each note was illuminated at the same moment it was heard.
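One way to see why triangles and squares might appear is to ignore the octave (the radius) and look only at the angles between the notes of a chord. The sketch below assumes twelve semitones per turn and uses hypothetical helper names; it is not taken from the project's animation.

```python
def angular_gaps(pitch_classes):
    """Gaps, in degrees, between neighboring chord notes around the circle."""
    ordered = sorted(set(pc % 12 for pc in pitch_classes))
    gaps = []
    for i, pc in enumerate(ordered):
        nxt = ordered[(i + 1) % len(ordered)]
        # Semitone steps to the next note, wrapping around; a lone note spans 12.
        steps = (nxt - pc - 1) % 12 + 1
        gaps.append(steps * 30)
    return gaps

def is_regular_polygon(pitch_classes):
    """True when three or more notes are evenly spaced around the circle."""
    gaps = angular_gaps(pitch_classes)
    return len(gaps) >= 3 and len(set(gaps)) == 1

augmented_triad = [0, 4, 8]         # C, E, G#: three 120-degree gaps
diminished_seventh = [0, 3, 6, 9]   # C, D#, F#, A: four 90-degree gaps
c_major_triad = [0, 4, 7]           # C, E, G: uneven gaps
```

With this projection, an augmented triad traces an equilateral triangle and a diminished seventh chord traces a square, while a major triad forms an irregular triangle.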

Part 3 – Interactive Program

A live interactive version of the sound spiral was created using Processing.

Watch a video that demonstrates the program, or follow the link to the actual program.

Link to the LIVE INTERACTIVE PROGRAM.

Part 4 – Interactive Physical Prototype

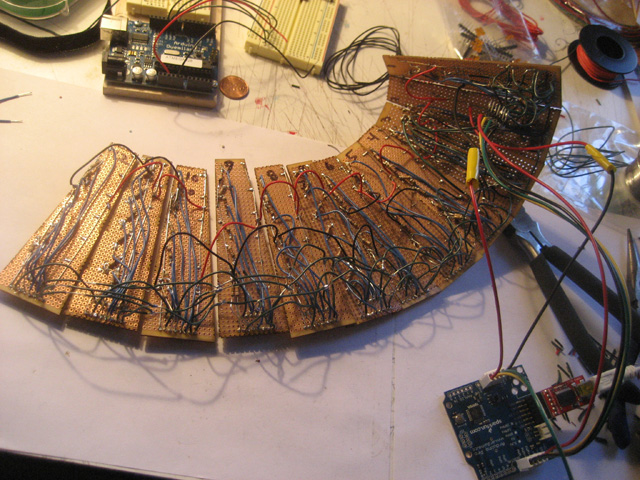

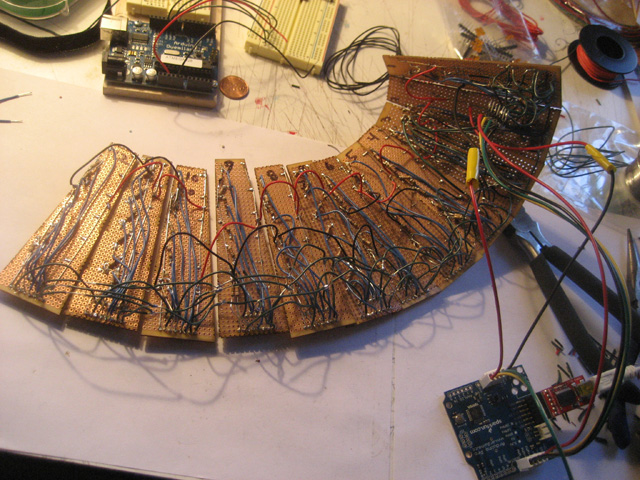

A physical prototype of the sound spiral was built using the Arduino platform. An array of reed switches was used to activate the individual notes, and a magnetic wand triggered each switch. The signals were sent from the Arduino to the Processing program, allowing a user to play the sound spiral with their hands rather than just the mouse.

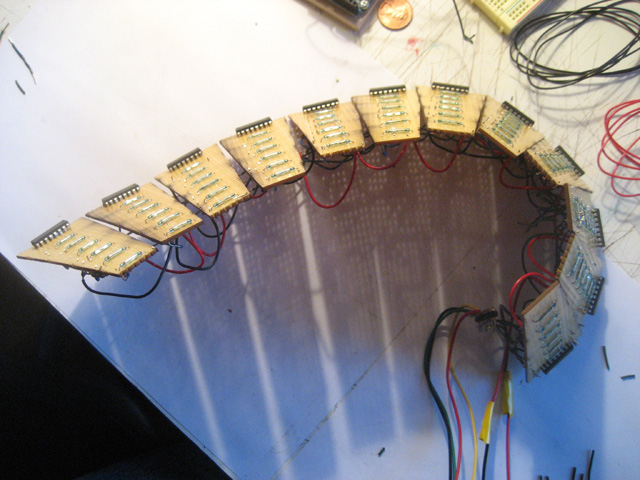

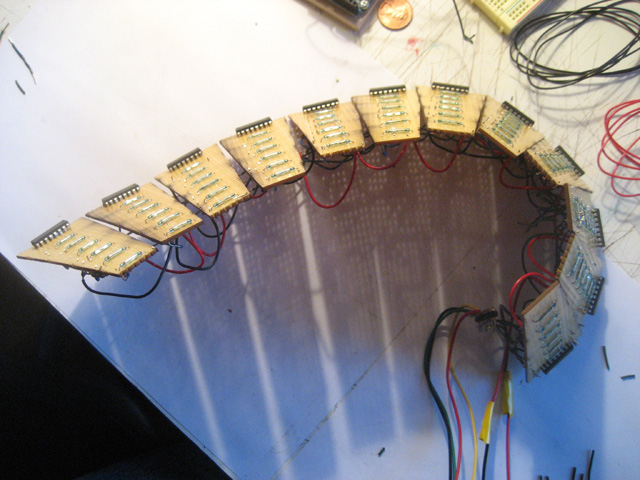

Twelve individual panels were custom-made and hand-soldered.

The panels were assembled together into the spiral form.

Video Demonstration of the device connected to a laptop running the Processing sketch.

Video Demonstration of chord mode and wand interface.

Project Overview

We, as inventors, create machines that make our lives easier. We, as end users, expect machines to serve us. But what if a machine were created merely to toy with our emotions?

Meet Closer: a playful robot that attracts your attention, but taunts and teases you with its evasive behavior.

A collaborative project between Melissa Clarke, Noah King, and Michelle Temple.

Part 1 – Concept Ideation

This project was done as an assignment in Dan O’Sullivan’s Intro to Physical Computing course. The intent of the assignment was to create a physical interface for manipulating media. During our first group meeting, we chose to pursue an interactive object that repels its user. Each of us was fascinated by the attraction and repulsion of magnets, and felt that there was great potential to use this type of interaction to engage a user in a physical way.

Pushing the boundaries of the assignment, we were excited to rethink the nature of a media controller. Rather than having a user control media through an interface, we wanted to turn the user into the media and create a machine that manipulated the user. We wanted to make a robot that acted like a cat: first it would attract people to it for personal attention and physical contact, but later it would become aggressive and evasive, leaving the person feeling deserted and defeated. As we set out to create a human-robot interaction that was both emotionally complex and unconventional, the concept of Closer emerged.

Part 2 – Creating Movement

Our first step was to build a simple toy car and try to get it to move. We explored using gear motors, constructed a working model, and were successful in turning our enthusiasm into actual, measurable movement.

Part 3 – Remote Control

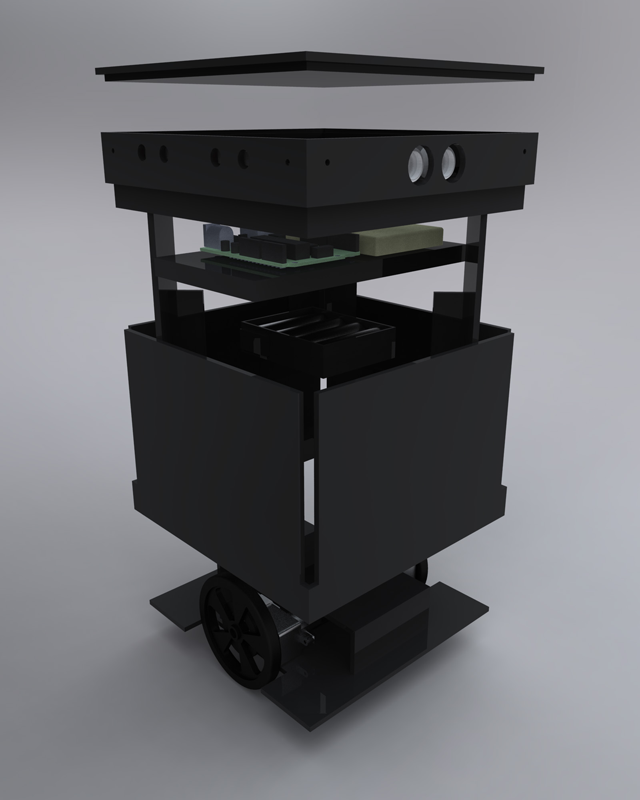

A two-wheeled mockup was built that cut the robot loose from the computer. A lightweight chassis was loaded up with the micro-controller, a battery pack, and the motor/wheel assembly. An accelerometer was attached to the micro-controller and a program was written so that tipping a spoon would control whether the bot moved forward, backward, or turned.
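The tilt-to-motion mapping can be illustrated with a small sketch. The original program is not shown here, so everything in it is an assumption: the tilt values are taken to be normalized to the range -1 to 1, the dead zone and the "stop" command are added for illustration, and the function name is hypothetical.

```python
def tilt_to_command(tilt_x, tilt_y, dead_zone=0.2):
    """Map a normalized two-axis tilt reading to a drive command.

    tilt_y is assumed to be the forward/backward tip and tilt_x the
    left/right tip; both are assumed to lie between -1 and 1.
    """
    # Small tilts are ignored so the robot does not creep when held level.
    if abs(tilt_x) < dead_zone and abs(tilt_y) < dead_zone:
        return "stop"
    # The dominant axis decides between driving and turning.
    if abs(tilt_y) >= abs(tilt_x):
        return "forward" if tilt_y > 0 else "backward"
    return "turn_right" if tilt_x > 0 else "turn_left"
```

Choosing the dominant axis keeps the behavior to the three motions the mockup supported: forward, backward, and turning.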

Part 4 – Evasion Algorithm

Processing was used to quickly test and develop an evasion algorithm for the robot. Employing a six-sensor array, the robot could sense objects from multiple directions. Because the robot was best at moving forward and backward or rotating, we designed the evasive behavior around this constraint. When an object was sensed, the robot would rotate until its orientation was parallel with the object, and then it would move farther away from the object in a straight line. The Processing code was ported to Arduino and the updated robot was ready to test.
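The rotate-then-retreat behavior can be sketched as follows. This is one possible reading of the description, not the project's actual algorithm: it assumes the six sensors are spaced evenly at 60-degree intervals with sensor 0 facing forward, that readings are normalized so larger means closer, and that "parallel" means lining up the robot's drive axis with the direction to the object. The function name and threshold are hypothetical.

```python
def plan_evasion(readings, threshold=0.5):
    """Return (rotation_degrees, drive_direction), or None if nothing is near.

    readings: six proximity values, sensor i assumed to point at i * 60
    degrees clockwise from the robot's front.
    """
    if len(readings) != 6:
        raise ValueError("expected readings from six sensors")
    strongest = max(range(6), key=lambda i: readings[i])
    if readings[strongest] < threshold:
        return None
    bearing = strongest * 60
    # Express the bearing as a signed angle between -180 and 180 degrees.
    signed = bearing - 360 if bearing > 180 else bearing
    if abs(signed) <= 90:
        # Object is ahead: turn the front toward it, then back away.
        return signed, "backward"
    # Object is behind: turn the rear toward it, then drive forward.
    rear_turn = signed - 180 if signed > 0 else signed + 180
    return rear_turn, "forward"
```

Because either end of the drive axis may face the object, the robot in this sketch never needs to rotate more than 90 degrees before retreating in a straight line.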

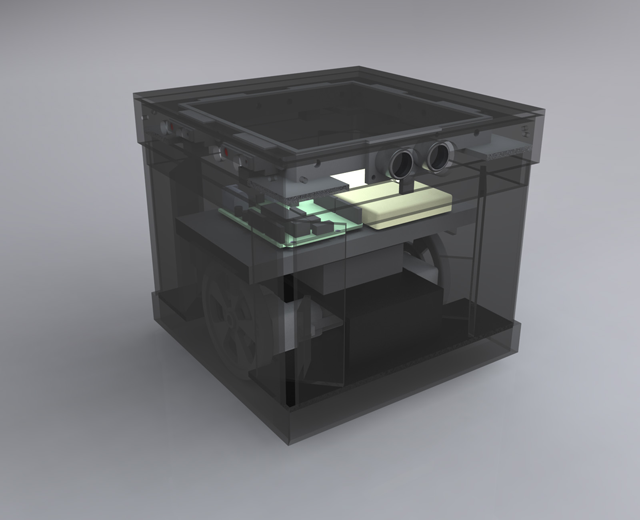

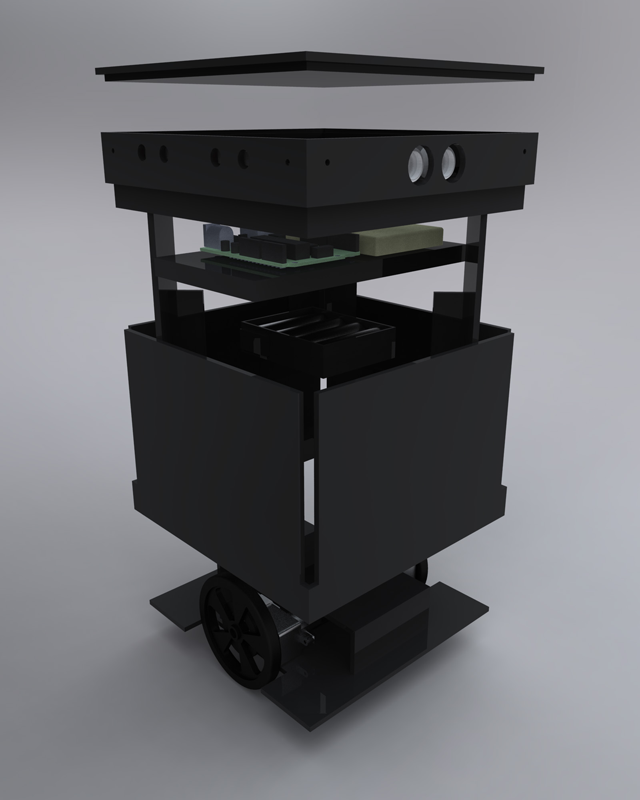

Part 5 – Working Prototypes

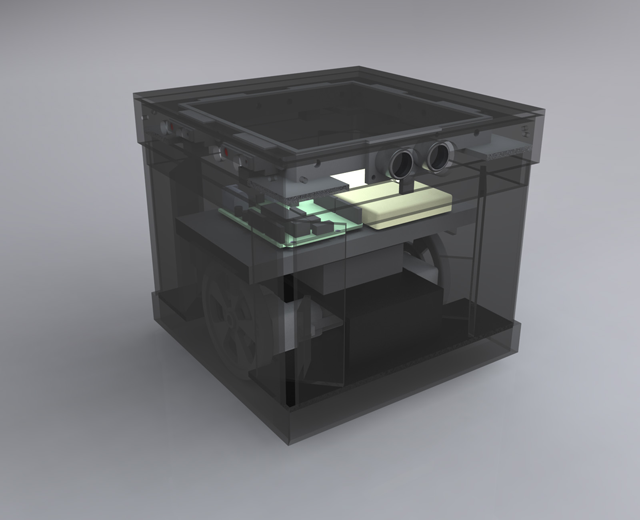

An initial cardboard model was used to mount the sensors to the chassis and test the robot’s behavior. Next, 3D SolidWorks models were built to design an elegant enclosure for the robot. Laser-cut acrylic panels were assembled and a full-fledged prototype was built.